This is work in progress

Wednesday, December 23, 2015

Friday, December 18, 2015

Houston Hadoop Meetup - Going from Hadoop to Spark

This time Mark Kerzner, the organizer, presented "Going from Hadoop to Spark", but with a slant toward the basics. It worked: more than half of the audience were there for the first time, and they got an introduction to Hadoop as well as to Spark.

It may have worked too well: many people wanted to bypass Hadoop altogether and start their learning with Spark. Don't. Start with a Hadoop overview (you can get our free book here); good Spark training content is available from Databricks, the company behind Spark. You might also want the introduction to "Spark Illuminated", the book we are currently writing.

The slides from the presentation can be found here.

Thank you and see you next time.

PS. The new meeting place at the Slalom office in Houston was fantastic, and we plan to stay there. Thanks!


Wednesday, December 9, 2015

Data Analytics at the Memex DARPA program with ASPOSE

I am a fan of open source. At DARPA, I work with open source technologies and create more open source as a result. However, when I had to extract information from a PDF police report, I ran into problems with this type of PDF. Here is a fragment of my document.

Now, you can easily see that the document breaks into (field, value) pairs. However, if you copy/paste the text, you get this:

Report no.:

Occurrence Type:

Occurrence time:

Reported time:

Place of offence:

Clearance status:

Concluded:

Concluded date:

Summary:

Remarks:

20131 234567

Impaired Operation/over 80 mg% of Motor Vehicle 253(1)(a)/(b) CC

2013/08/08 20:10 -

2013/08/08 20:10

1072 102 STREET, NORTH BATTLEFORD, SK Canada (CROWN CAB) (Div: F,

Dist: CENTRAL, Det: Battleford Municipal, Zone: BFD, Atom: C)

Cleared by charge/charge recommended

Yes

2013/08/29

Cst. SMITH

As you can see, the formatting is not preserved, and the text becomes very hard to parse. I tried 'save as text', I tried Tika, I tried PDFBox, and I also asked the Tika people. The result is always the same: I get all the text, but not the formatting.

Enter Aspose: closed source, and with a price tag. But you know what? It is the only tool that does the job and gives me text output with the same layout as the PDF.


Here is the code I had to use:

```java
private void initAsposeLicense() {
    com.aspose.pdf.License license = new com.aspose.pdf.License();
    try {
        // ClassLoader classLoader = getClass().getClassLoader();
        // File file = new File(classLoader.getResource("Aspose.Pdf.lic").getFile());
        // InputStream licenseStream = new FileInputStream(file);
        // license.setLicense(licenseStream);
        license.setLicense("Aspose.Pdf.lic");
    } catch (Exception e) {
        logger.error("Aspose license problem", e);
    }
}
```

As you can see, I tried to stream the license in. It would be better to distribute everything as one jar, but that did not work for some reason. Then again, keeping the license outside the jar may be better, since you can replace it. So I just read it from the folder where the executable is located.
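I can't say for certain why streaming the license from the jar failed. One likely cause, and this is an assumption, is `getResource(...).getFile()`. When the file is packed inside a jar, that call returns a `file:...jar!/Aspose.Pdf.lic`-style path, and `FileInputStream` cannot open it. `getResourceAsStream` reads the jar entry directly. Here is a minimal sketch that combines both options from the paragraph above. It uses a hypothetical `LicenseLocator` helper, which is not part of Aspose. The helper tries the classpath first and then falls back to a file in a folder you pass in.

```java
import java.io.FileNotFoundException;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper (not an Aspose class): finds the license file as a stream.
class LicenseLocator {

    /**
     * Opens the license first from the classpath (works when it is packed inside a jar),
     * then from a file in fallbackDir. The caller is responsible for closing the stream.
     */
    static InputStream open(String name, Path fallbackDir) throws IOException {
        // getResourceAsStream reads the entry itself, so it also works inside a jar,
        // unlike new File(getResource(name).getFile()).
        InputStream fromClasspath = LicenseLocator.class.getClassLoader().getResourceAsStream(name);
        if (fromClasspath != null) {
            return fromClasspath;
        }
        // Fall back to a replaceable file kept outside the jar.
        Path onDisk = fallbackDir.resolve(name);
        if (Files.isRegularFile(onDisk)) {
            return Files.newInputStream(onDisk);
        }
        throw new FileNotFoundException(name + " not found on the classpath or in " + fallbackDir);
    }
}
```

You can then pass the returned stream to the `setLicense(InputStream)` overload that the commented-out code already calls. Open it in a try-with-resources block so that it gets closed.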

Extracting the text was also extremely easy:

```java
private String extractWithAspose(File file) throws IOException {
    // Open document
    com.aspose.pdf.Document pdfDocument = new com.aspose.pdf.Document(file.getPath());
    // Create TextAbsorber object to extract text
    com.aspose.pdf.TextAbsorber textAbsorber = new com.aspose.pdf.TextAbsorber();
    // Accept the absorber for all the pages
    pdfDocument.getPages().accept(textAbsorber);
    // Get the extracted text
    String extractedText = textAbsorber.getText();
    // System.out.println("extractedText=\n" + extractedText);
    return extractedText;
}
```

So now I can create a spreadsheet of fields/values for the whole document corpus:

Report no.:|Occurrence Type:|Occurrence time:|Reported time:|Place of offence:|Clearance status:|Concluded:|Concluded date:|Summary:|Remarks:|Associated occurrences:|Involved persons:|Involved addresses:|Involved comm addresses:|Involved vehicles:|Involved officers:|Involved property:|Modus operandi:|Reports:|Supplementary report:

"20131234567"|

Now I can happily proceed with my text analytics tasks.
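The post doesn't show how the fields were split out of the Aspose text. Here is a minimal sketch that assumes the layout-preserving output puts each label and its value on the same line (for example `Report no.:   20131234567`), with wrapped values continuing on the following lines. The class and method names (`FieldRowBuilder`, `parse`, `row`) are hypothetical. The sketch takes the labels from the spreadsheet header row above. It defensively tries longer labels first, in case one label is a prefix of another. It then emits one pipe-delimited row with quoted values.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: turns layout-preserving "Label: value" text into spreadsheet rows.
class FieldRowBuilder {

    /** Header row: the field labels joined with '|'. */
    static String header(List<String> labels) {
        return String.join("|", labels);
    }

    /**
     * Assumes each field starts on its own line as "Label: value"; a line that starts
     * with no known label is treated as a continuation of the previous field's value.
     */
    static Map<String, String> parse(String text, List<String> labels) {
        // Try longer labels first, so a label that is a prefix of another cannot win.
        List<String> byLength = new ArrayList<>(labels);
        byLength.sort((a, b) -> Integer.compare(b.length(), a.length()));

        Map<String, StringBuilder> found = new LinkedHashMap<>();
        String current = null;
        for (String rawLine : text.split("\\R")) {
            String line = rawLine.trim();
            if (line.isEmpty()) {
                continue;
            }
            String label = null;
            for (String candidate : byLength) {
                if (line.startsWith(candidate)) {
                    label = candidate;
                    break;
                }
            }
            if (label != null) {
                current = label;
                found.put(label, new StringBuilder(line.substring(label.length()).trim()));
            } else if (current != null) {
                StringBuilder value = found.get(current);
                if (value.length() > 0) {
                    value.append(' ');
                }
                value.append(line);
            }
        }

        // Return values in header order; missing fields become empty strings.
        Map<String, String> result = new LinkedHashMap<>();
        for (String label : labels) {
            StringBuilder value = found.get(label);
            result.put(label, value == null ? "" : value.toString());
        }
        return result;
    }

    /** One data row: each value double-quoted (embedded quotes doubled), joined with '|'. */
    static String row(Map<String, String> fields) {
        List<String> cells = new ArrayList<>();
        for (String value : fields.values()) {
            cells.add("\"" + value.replace("\"", "\"\"") + "\"");
        }
        return String.join("|", cells);
    }
}
```

Continuation lines are joined with a single space. With the sample above, the two-line "Place of offence" value therefore ends up in one cell.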


Tuesday, November 24, 2015

Thanksgiving in the Big Data Land

There are a few ways it could work out in the world of Big Data:

- The traditional Hadoop elephant serves the traditional turkey.

- Two vegetarians, turkey and elephant, eat the traditional pumpkin pie.

- Nobody eats anybody at all, in the style of Through the Looking-Glass and the pudding (if you remember, Alice was introduced to the pudding, and it is not etiquette to cut anyone you have been introduced to).

Tuesday, October 27, 2015

Hadoop and Spark cartoon

Our artist outdid herself with this cartoon; it is totally hilarious.

We have been teaching a lot of Spark courses lately, though.


Sunday, October 11, 2015

Learning Scala by Example

I have started a blog series, "Learning Scala by Example" using the excellent latest book of the same name by Martin Odersky.


So far I have three blog posts,

- Intro (why you should study Scala)

- Setting your working environment, and

- Getting to business with expressions and functions.

Following posts will appear regularly, one by one. Watch here.

Enjoy the challenge and write back!

Wednesday, September 16, 2015

Houston Hadoop Meetup - David Ramirez presents Informatica

At the recent meeting, David Ramirez of Informatica presented the platform and explained how it saves time in data modeling for Big Data. His slides are here, and they also contain a link to a demo on YouTube.

Keep in mind that David is working with Oil & Gas accounts in Houston - so you may get some interesting information from the slides.

Pizza was provided by Elephant Scale, and the meeting was hosted by Microsoft. Thanks to Tiru for helping with hosting.


Thursday, September 3, 2015

Big Data Cartoon - Hadoop is quite mature

Signs of Hadoop maturity:

- There is a competitor, Spark

- There is consolidation: Cloudera, Hortonworks, MapR dominate

- Promising startups are snatched up: Hortonworks acquired Onyara, the maker of NiFi

- The elephant himself grew up - see this picture :)

Thursday, August 20, 2015

Mike Drob presents Cloudera Search at Houston Hadoop Meetup

Cloudera's value-add is an open source, feature-rich search engine plus all the integrations with an (also open source) data management ecosystem, to streamline multi-workload search, or search and other workloads on the same data, without moving the data between systems. Cloudera also provides production tooling, audit, and security.

In addition, open source buffs can use the implementation described in these slides to glean best practices for their own solutions.

Very good, clear discussion - thank you, Mike!

Thanks again, Microsoft, for hosting the meetup at MS Campus.

Wednesday, July 22, 2015

Review of “Monitoring Hadoop” by Gurmukh Singh

This book was published recently, in April 2015, and it covers Nagios, Ganglia, Hadoop monitoring, and monitoring best practices.

The first part is rightfully devoted to Nagios, which is covered in depth: installation, verification, and configuration. The book strikes the right balance: it does not repeat everything in the Nagios manual, but it gives you enough information to install Nagios and prepare it to monitor specific Hadoop daemons, ports, and hardware.

The same goes for Ganglia: it is covered in sufficient detail for one to be able to install and run, with enough attention to Hadoop specifics.

What I did not find in the book, and what could be useful...

Review of “Hadoop in Action,” second edition

Four years have passed since the first publication, and as Russians say, “A lot of water has passed (under the bridge) since then,” so let’s look at what’s new in this edition.

Tuesday, July 7, 2015

The power of text analytics at DARPA/Memex

One of the things we are doing in the DARPA Memex program is text analytics. One of the outcomes of it is an open source project called MemexGATE.

GATE itself stands for General Architecture for Text Engineering, and it is a mature and widely used tool. It is up to you to create something useful with GATE, and MemexGATE is our first step: an application configured to understand court documents. It will detect the people, dates, places, and many more characteristics mentioned in the documents, which takes you beyond plain keyword searches.


To achieve this, GATE combines processing pipelines (such as a sentence splitter, a language-specific word tokenizer, a part-of-speech tagger, etc.) with gazetteers. Now, what is a gazetteer? It is a list of people, places, etc. that can occur in your documents. MemexGATE includes scripts that collect all US judges, for example, so that they can be detected when found in a document.
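To make the gazetteer idea concrete, here is a plain-Java sketch. It is not GATE's API; GATE's own gazetteer components do this inside the pipeline, with far more options. The sketch shows only the basic mechanism: a dictionary of known names, each tagged with a type, matched against tokenized text. At each position it prefers the longest entry, so "New York Court of Appeals" wins over "New York". The class name and the sample entries are made up for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified gazetteer (not GATE's implementation).
class SimpleGazetteer {

    /** One annotation: the matched surface text and the type of its gazetteer list. */
    static final class Match {
        final String text;
        final String type;

        Match(String text, String type) {
            this.text = text;
            this.type = type;
        }

        @Override
        public String toString() {
            return type + ":" + text;
        }
    }

    // Lower-cased, space-joined entry tokens -> type, e.g. "john smith" -> "Judge".
    private final Map<String, String> entries = new HashMap<>();
    private int longestEntry = 0; // length of the longest entry, in tokens

    void add(String entry, String type) {
        List<String> tokens = tokenize(entry);
        entries.put(String.join(" ", tokens).toLowerCase(), type);
        longestEntry = Math.max(longestEntry, tokens.size());
    }

    /** Crude word tokenizer: runs of letters or digits; everything else separates words. */
    static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<>();
        for (String t : text.split("[^\\p{L}\\p{N}]+")) {
            if (!t.isEmpty()) {
                tokens.add(t);
            }
        }
        return tokens;
    }

    /** Scans left to right, taking the longest entry that starts at each token. */
    List<Match> annotate(String text) {
        List<String> tokens = tokenize(text);
        List<Match> matches = new ArrayList<>();
        int i = 0;
        while (i < tokens.size()) {
            int matchedLength = 0;
            for (int n = Math.min(longestEntry, tokens.size() - i); n >= 1; n--) {
                String candidate = String.join(" ", tokens.subList(i, i + n));
                String type = entries.get(candidate.toLowerCase());
                if (type != null) {
                    matches.add(new Match(candidate, type));
                    matchedLength = n;
                    break;
                }
            }
            // Jump past the match, or advance one token if nothing matched here.
            i += Math.max(matchedLength, 1);
        }
        return matches;
    }
}
```

For example, suppose the entries are "John Smith" (Judge), "New York" (Location), and "New York Court of Appeals" (Court). The sentence "Judge John Smith of the New York Court of Appeals, in New York." then yields three annotations: Judge:John Smith, Court:New York Court of Appeals, and Location:New York.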

But MemexGATE does more: it is scalable. Building on the Behemoth framework, it can parallelize processing on a Hadoop cluster, thus putting no limit on the size of the corpus. MemexGATE was designed and implemented by the Jet Propulsion Laboratory team, and the project committer is Lewis McGibbney.

The picture shown above gives an example of a processed document (from the NY Court of Appeals), with specific finds color-coded. In this way, we process more than 100,000 documents. Why is this useful for us at Memex? Because we are trying to find and parse court documents related to labor trafficking, so that we can analyze them and better understand the indicators of labor trafficking.

It is very exciting to work on the Memex program. Our team is called "Hyperion Gray" and has been featured in Forbes lately.

What's next? One of the plans is to add document understanding to FreeEed, the open source eDiscovery platform. Instead of just running keyword searches through the documents, lawyers will be able to use text analytics to make more sense of them: detect people, dates, organizations, etc. This will in turn help build a picture of the case in an automated way.

Disclaimer: we are not official speakers for Memex.


Friday, July 3, 2015

Big Data Cartoons - Summer of Big Data

Wednesday, June 10, 2015

Joe Witt of Onyara presented Apache NiFi

Joe Witt and the Onyara team came to present Apache NiFi at the Houston Hadoop Meetup. NiFi is the result of eight years of development at the NSA, and it was open sourced in November 2014.

The project is for automating enterprise dataflows, and its salient use cases are


- Remote sensor delivery

- Inter-site/global distribution

- Intra-site distribution

- "Big Data" ingest

- Data Processing (enrichment, filtering, sanitization)

For the rest, in the words of Shakespeare

"Let Lion, Moonshine, Wall, and lovers twain

At large discourse, while here they do remain."

Meaning, in our case: here are the slides, courtesy of Joe.

Oh, and there WAS a live demo, so those who missed it - missed it.

As always, pizza was provided by Elephant Scale LLC, Big Data training and consulting.

Monday, June 8, 2015

Big Data Cartoon - Summer Fun

Summer is the time to have fun and to get some rest! While their moms and dads are presumably coding away some new Big Data app, their kids can go to the summer camp. So did our Big Data cartoonist, who is now working as a summer camp artistic director. (These "cartoons" are really the large size decorations there.)

But you can see the same themes, albeit hidden: the tiger is no doubt the new elephant, and the magicians are the software engineers.

Thursday, June 4, 2015

Review of "Apache Flume" by Steve Hoffman (Packt)

This is the second edition of the Apache Flume book, and it covers the latest Flume version, 1.5.2. The author works at Orbitz, so he can draw on a lot of practical Big Data experience.

The intro chapter takes you through the history, versions, and requirements of Flume, and through its installation and a sample run. The author gives you information on useful undocumented options and takes you to the cutting edge by showing how to submit new requests to the Flume team (using his own request as an example).

That might seem enough, but here is the justification for the book, and for all the additional architectural options in Flume: real life will give you data collection troubles you never thought of before. There will be memory and storage limitations on any node where you install Flume, and that is why your real-world architectures will be multi-tiered, with parts of the system down for significant lengths of time. This is where more knowledge will be required.

Channels and sinks get their own chapters. You will learn about file rotation, data compression, and serialization mechanisms (such as Avro) to be used in Flume. The descriptions of load balancing and failover will help you create robust data collection.

Flume can collect data from a variety of sources, and chapter five describes them, with a lot of in-the-know information, best practices, and potential gotchas.

Interceptors (and in particular the Morphline interceptor) are lesser-known but very powerful libraries for improving your data flows in Flume. Morphlines themselves are part of KiteSDK.

Chapter seven, “Putting it all together”, leads you through a practical example of collecting data and storing it in Elasticsearch under specific Service Level Agreements (SLAs), and then setting up Kibana to view the results.

The chapter on monitoring is useful because monitoring, while important, is not yet complete in Flume, and the more up-to-date information on it you can get, the better, so you avoid flying in the dark. Imagine someone telling you that you've been losing data for a month, and that parts of your system were not working, unbeknownst to you. To avoid this, use monitoring!

The last chapter gives advice on deploying Flume in multiple data centers and on the “evils” of time zones.

All in all, a must for anyone needing data collection skills in Big Data and Flume.

Friday, May 8, 2015

Big Data Cartoon: Spark workshop

So what is Apache Spark workshop? You would imagine that sparks are flying off the participants and, at least in the eyes of our illustrator, this is absolutely true. By the way, Elephant Scale will be teaching a one-day workshop soon, in Dallas, TX, so stay tuned.

(What is the inspiration behind this drawing? It is Rembrandt's painting, here: a work of genius, but on a gruesome subject, so you have been warned.)


Wednesday, April 29, 2015

I am a reviewer on "Real-time Analytics with Storm and Cassandra"

- Create your own data processing topology and implement it in various real-time scenarios using Storm and Cassandra

- Build highly available and linearly scalable applications using Storm and Cassandra that will process voluminous data at lightning speed

- A pragmatic and example-oriented guide to implement various applications built with Storm and Cassandra

Tuesday, April 28, 2015

Big Data Cartoon - In the Big Small world

Working with Big Data, you are also working across the globe. As Sujee Maniyam puts it in his presentation on Launching your career in Big Data: "If you think of a nine-to-five job - forget it!" You are working with multiple teams at all hours. The reward, in the words of Shakespeare:

Why, then the world's mine oyster.

Many people quote this, but in the play it's better. Falstaff fires Pistol, his servant, and refuses to lend him money. Pistol decides to fend for himself. Falstaff is being witty at his own expense: Pistol will betray him.

Enter FALSTAFF and PISTOL

FALSTAFF

I will not lend thee a penny.

PISTOL

Why, then the world's mine oyster,

Which I with sword will open.

FALSTAFF

Not a penny. I have been content, sir, you should

lay my countenance to pawn...

Wednesday, April 8, 2015

I am a reviewer on "Learning Apache Cassandra"

I am a reviewer on the new Packt Cassandra book.

What You Will Learn

- Install Cassandra and create your first keyspace

- Choose the right table structure for the task at hand in a variety of scenarios

- Use range slice queries for efficient data access

- Effortlessly handle concurrent updates with collection columns

- Ensure data integrity with lightweight transactions and logged batches

- Understand eventual consistency and use the right consistency level for your situation

- Implement best practices for data modeling and access

Wednesday, March 25, 2015

Jim Scott on Zeta architecture at Houston Hadoop Meetup

Jim Scott dropped in on his trip from Chicago to Houston, and presented his Zeta architecture. Here are the major points:

- Zeta architecture is nothing new; Jim has just created pretty diagrams and is popularizing it

- It is really the Google architecture, except that Google will not confirm or deny it

- It is the last generic Big Data architecture that you will need, and it solves the following problems:

- Provide high server utilization. Here's why this is important

- Allow scaling from test to stage to production environments without reconfiguring the system and without re-importing the data

Not convinced yet? Here are the slides of the presentation (provided promptly on the day of the meetup)

Thanks to Microsoft for the awesome (and very spacious) meeting room, and our task now is to fill it - so please, Meetup members in Houston, invite your friends. Remember, pizza from Saba's is always provided.

Wednesday, March 11, 2015

Ravi Mutyala of Hortonworks talks about Stinger.next.

The Stinger Initiative enabled Hive to support an even broader range of use cases at truly Big Data scale, bringing it beyond its batch roots to support interactive queries, all with a common SQL access layer.

Stinger.next is a continuation of this initiative focused on even further enhancing the speed, scale and breadth of SQL support to enable truly real-time access in Hive while also bringing support for transactional capabilities. And just as the original Stinger initiative did, this will be addressed through a familiar three-phase delivery schedule and developed completely in the open Apache Hive community.

Ravi talked about some of the changes that came with Stinger, and the ones that are in progress in Stinger.next.

This was our first meeting at the MS Office on Beltway 8, and it rocks! Thank you, Jason, for arranging this, and also for showing some of the Azure stuff at the end :)


Sunday, March 8, 2015

How to add a hard drive to HDFS on AWS

Imagine you need to add more space to your HDFS cluster that is running on Amazon EC2. Here are the simple steps you need to take:

1. Add a volume in the AWS EC2 console. Make sure that the volume is in the same availability zone as your instance, such as us-east-1c.

2. Attach the volume to the instance: right-click the volume and choose "Attach Volume".

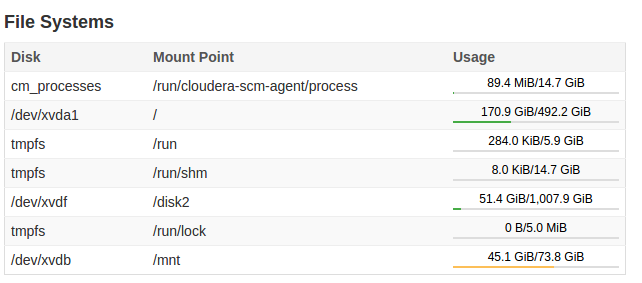

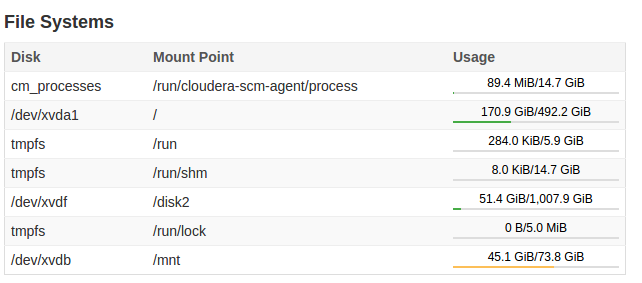

3. Make the volume available for use by formatting and mounting the drive (the commands are here). Now you see the new volume (in my case, I mounted 1 TB of space as /disk2).

4. Add this drive as one of those that HDFS should use. I have added the directory for the datanode's use as below

5. Presto! You get much more space. Repeat to taste :)
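On Hadoop 2.x, the DataNode's storage directories are listed, comma-separated, in the `dfs.datanode.data.dir` property of hdfs-site.xml. Hadoop 1.x called this property `dfs.data.dir`. Step 4 therefore amounts to appending the new mount point to that list. My actual setting isn't reproduced here. The sketch below is a minimal illustration that uses only the JDK's XML APIs and a hypothetical directory, `/disk2/hdfs/data`. It appends the directory once and creates the property if it is missing. The directory must be writable by the user running the DataNode. The DataNode generally needs a restart before it starts using the new directory.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical helper: appends a storage directory to hdfs-site.xml content.
class HdfsDataDirs {

    // Hadoop 2.x property holding the DataNode's comma-separated storage directories.
    static final String KEY = "dfs.datanode.data.dir";

    /** Returns the XML with newDir appended to KEY, adding the property if it is absent. */
    static String addDataDir(String hdfsSiteXml, String newDir) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new InputSource(new StringReader(hdfsSiteXml)));
        Element configuration = doc.getDocumentElement();

        Element value = findValue(configuration, KEY);
        if (value == null) {
            Element property = doc.createElement("property");
            Element name = doc.createElement("name");
            name.setTextContent(KEY);
            value = doc.createElement("value");
            property.appendChild(name);
            property.appendChild(value);
            configuration.appendChild(property);
        }

        // Append only if the directory is not already in the comma-separated list.
        String current = value.getTextContent().trim();
        boolean present = false;
        for (String dir : current.split(",")) {
            if (dir.trim().equals(newDir)) {
                present = true;
            }
        }
        if (!present) {
            value.setTextContent(current.isEmpty() ? newDir : current + "," + newDir);
        }
        return toXml(doc);
    }

    /** Finds the <value> element of the <property> whose <name> equals key, or null. */
    private static Element findValue(Element configuration, String key) {
        NodeList properties = configuration.getElementsByTagName("property");
        for (int i = 0; i < properties.getLength(); i++) {
            Element property = (Element) properties.item(i);
            NodeList names = property.getElementsByTagName("name");
            NodeList values = property.getElementsByTagName("value");
            if (names.getLength() > 0 && values.getLength() > 0
                    && key.equals(names.item(0).getTextContent().trim())) {
                return (Element) values.item(0);
            }
        }
        return null;
    }

    private static String toXml(Document doc) throws Exception {
        Transformer transformer = TransformerFactory.newInstance().newTransformer();
        StringWriter out = new StringWriter();
        transformer.transform(new DOMSource(doc), new StreamResult(out));
        return out.toString();
    }
}
```

Running it twice with the same directory leaves the list unchanged. That makes it safe to repeat "to taste" on each node.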


Tuesday, February 24, 2015

Big Data Cartoon - What's with Pivotal?

Last week, Pivotal joined forces with its former rival, Hortonworks, announcing that they will form a joint Hadoop Core platform. In my understanding, Pivotal is giving up its own distribution of Hadoop in favor of the Hortonworks Data Platform.

However, Yevgeniy Sverdlik at Data Center Knowledge quotes Cloudera and MapR as saying that there is no need for another Hadoop Core, and that this is self-serving marketing.

Who is right? Our illustrator ponders.

Monday, February 23, 2015

FreeEed technologies led to DARPA project

FreeEed technologies impressed the DARPA team and led to a contract to fight human trafficking. The full press release by the main contractor, Hyperion Gray, is quoted below. While FreeEed and Elephant Scale can't have their own press release, their involvement is fully explained.

Wednesday, February 18, 2015

My lab

Tuesday, February 17, 2015

HBase Design Patterns by Mark Kerzner

The "HBase Design Patterns" book was presented by Mark Kerzner, one of the authors. Since the Houston Hadoop Meetup group is usually mixed, Mark started with HBase overview, and continued with a deeper dive into the book's content.

But the Big Data landscape in Houston is maturing, and this time about half of the group were Big Data professionals. Way to go!


Wednesday, February 11, 2015

Big Data Cartoon - Elephant gets a credit card

You may not be certain that elephants get credit cards, but one thing is for sure: credit card companies get Apache Hadoop in a big way. American Express is all in on it, and I think it has snapped up all available developers. VISA has great fraud identification based on Big Data. It seemed only fair to our illustrator to give the elephant at least one card.


Tuesday, February 3, 2015

Big Data Cartoon - help for cats and dogs

Encouraged by the success of our "Sparklet™ #1", we decided to combine our Big Data cartoons with some information, preferably about Spark, but also about other interesting news in the area of Big Data. So this is Sparklet #2.

Big Data helps cats and dogs. Trupanion Inc. (NYSE: TRUP) is a direct-to-consumer monthly subscription business that provides medical insurance for cats and dogs. They customize the insurance on an individual basis using Big Data; see here how they do it.

Another interesting cat-like project is Tigon, and it is a serious tiger - it is an open source low-latency streaming technology, based on Hadoop, HBase, and such thrills as Tephra and Apache Twill.

Tuesday, January 27, 2015

Sparklets

Here is a fitting illustration for the post by Sujee Maniyam on "Understanding Spark Caching".

Sunday, January 18, 2015

Readers would be pleased to know that we have teamed up with Packt Publishing to organize a Giveaway of our book HBase Design Patterns.


Three lucky winners stand a chance to win an e-copy of the book. Keep reading to find out how you can be one of the lucky ones.

Overview:

- Design HBase schemas for the most demanding functional and scalability requirements

- Optimize HBase's handling of single entities, time series, large files, and complex events by utilizing design patterns

- Written in an easy-to-follow style, and incorporating plenty of examples, and numerous hints and tips.

How to Enter?

All you need to do is head on over to the book page and look through the product description of the book and drop a line via the comments below this post to let us know what interests you the most about this book. It’s that simple.

Winners will get an e-copy of the book.

Deadline

The contest will close on February 1, 2015. Winners will be contacted by email, so be sure to use your real email address when you comment!
